Google's brain-inspired software learns to describe what it sees

Using experimental software that mimics the human brain, Google may soon give computers the ability to describe complex images.

Google's software is still in its early stages and remains prone to errors, according to the Massachusetts Institute of Technology's Technology Review.

“It’s very exciting... I’m sure there are going to be some potential applications coming out of this,” said Oriol Vinyals, a research scientist at Google.

Technology Review said the software can "use complete sentences to accurately describe scenes shown in photos, a significant advance in the field of computer vision."

It added that the software can even count.

While the work is just a research project for now, Vinyals said he and others at Google have already started thinking about how to use it to enhance image search or to help the visually impaired navigate, both online and in the real world.

Google Research scientists Oriol Vinyals, Alexander Toshev, Samy Bengio, and Dumitru Erhan wrote in their blog post:

People can summarize a complex scene in a few words without thinking twice. It’s much more difficult for computers. But we’ve just gotten a bit closer -- we’ve developed a machine-learning system that can automatically produce captions (like the three above) to accurately describe images the first time it sees them. This kind of system could eventually help visually impaired people understand pictures, provide alternate text for images in parts of the world where mobile connections are slow, and make it easier for everyone to search on Google for images.

Like the brain

The new software stemmed from Google's research into using large collections of simulated neurons to process data.

"No one at Google programmed the new software with rules for how to interpret scenes. Instead, its networks 'learned' by consuming data," MIT Technology Review said.

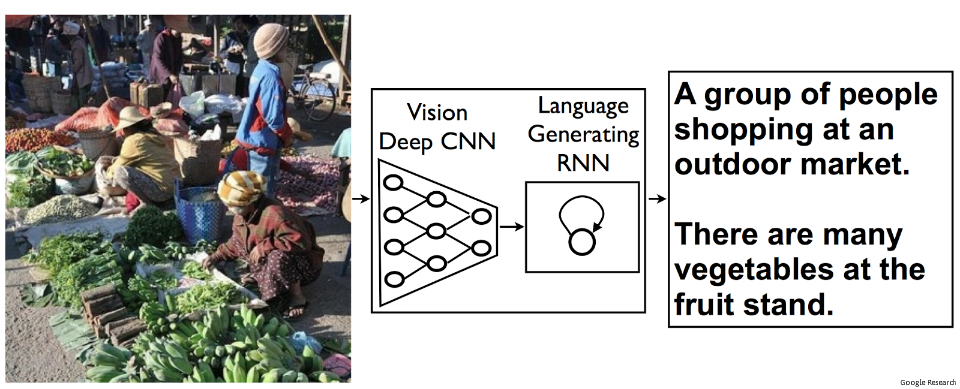

Google's researchers used a kind of digital brain surgery, "plugging together two neural networks developed separately for different tasks," it added.

Once combined, the first network can “look” at an image and then feed the mathematical description of what it “sees” into the second.

In turn, the second network uses that information to generate a human-readable sentence.
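The two-stage pipeline described above (one network turns an image into numbers, a second turns those numbers into words) can be sketched in a few lines. This is a toy illustration only: Google's published system used a convolutional image network and an LSTM language network, whereas here both are replaced by hypothetical single-layer stand-ins (`encode_image`, `decode_caption`) with random, untrained weights, so the words it prints are arbitrary.

```python
import math
import random

# Toy vocabulary (hypothetical); "<start>" seeds decoding and "<end>" stops it.
VOCAB = ["<start>", "<end>", "a", "dog", "cat", "on", "the", "grass", "sofa"]


def encode_image(pixels, weights):
    """Toy 'vision' network: one linear layer plus tanh turns raw pixel
    values into a short numeric description (feature vector) of the image."""
    return [math.tanh(sum(w * p for w, p in zip(row, pixels))) for row in weights]


def decode_caption(features, embeddings, output_weights, max_len=10):
    """Toy 'language' network: starting from the image features, emit one
    word at a time and feed each chosen word back in (greedy decoding)."""
    state = list(features)  # the image description seeds the hidden state
    word = "<start>"
    caption = []
    candidates = VOCAB[1:]  # "<start>" is never emitted
    for _ in range(max_len):
        # Simple recurrent update: mix the previous word into the state.
        state = [math.tanh(s + e) for s, e in zip(state, embeddings[word])]
        # Score every candidate word against the current state; pick the best.
        scores = [sum(w * s for w, s in zip(output_weights[v], state)) for v in candidates]
        word = candidates[scores.index(max(scores))]
        if word == "<end>":
            break
        caption.append(word)
    return caption


rng = random.Random(0)
DIM = 4
pixels = [rng.random() for _ in range(16)]  # a fake 4x4 grayscale image
enc_weights = [[rng.uniform(-1, 1) for _ in pixels] for _ in range(DIM)]
embeddings = {v: [rng.uniform(-1, 1) for _ in range(DIM)] for v in VOCAB}
output_weights = {v: [rng.uniform(-1, 1) for _ in range(DIM)] for v in VOCAB}

features = encode_image(pixels, enc_weights)  # first network "looks"
caption = decode_caption(features, embeddings, output_weights)  # second "speaks"
print(" ".join(caption))  # untrained weights, so the words are arbitrary
```

The point of the design is the hand-off: the only thing the language network ever sees is the feature vector, which is why two separately developed networks can be "plugged together".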

The combined network was then trained to generate more accurate descriptions by showing it tens of thousands of images paired with descriptions written by humans.
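Training on human-written captions is commonly framed as maximum-likelihood learning: the network is penalized whenever it assigns low probability to a word a person actually wrote, given the image and the words before it, and its weights are adjusted to shrink that penalty across the whole collection. The sketch below computes only that penalty (the negative log-likelihood); the function names and probabilities are hypothetical, and the weight-update step is omitted.

```python
import math


def caption_nll(word_probs):
    """Negative log-likelihood of one human-written caption.

    word_probs[t] is the probability the model assigned to the t-th word of
    the human caption, given the image and the human words before it.
    Training adjusts the weights to make this number smaller.
    """
    return -sum(math.log(p) for p in word_probs)


def dataset_loss(all_word_probs):
    """Average penalty over many (image, human caption) pairs."""
    return sum(caption_nll(p) for p in all_word_probs) / len(all_word_probs)


# Hypothetical per-word probabilities for two training captions.
confident = [0.9, 0.8, 0.9]  # model already agrees with the human caption
unsure = [0.5, 0.25, 0.5]    # model finds the human caption unlikely
print(caption_nll(confident), caption_nll(unsure))
print(dataset_loss([confident, unsure]))
```

A caption the model already "expects" costs little, while an unexpected one costs much more, so repeated exposure to human descriptions pushes the network toward phrasing that people would use.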

“We’re seeing through language what it thought the image was,” said Vinyals.

After training

After "training," the software was unleashed on large data sets of images from Flickr and other sources and asked to describe them.

The accuracy of its descriptions was then judged with an automated test used to benchmark computer-vision software.
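The article does not name the automated test. A widely used metric of this kind is BLEU, which rewards a machine-written sentence for sharing words with human-written reference descriptions and penalizes sentences that are too short. The sketch below assumes a simplified single-word (unigram) variant, often called BLEU-1, scaled to a 100-point range; the function name `bleu1` and the example sentences are hypothetical.

```python
import math
from collections import Counter


def bleu1(candidate, references):
    """Clipped unigram precision times a brevity penalty, scaled to 0-100."""
    cand = candidate.lower().split()
    refs = [r.lower().split() for r in references]
    if not cand:
        return 0.0
    # A word can only be credited as often as it appears in some reference.
    max_ref_counts = Counter()
    for ref in refs:
        for word, n in Counter(ref).items():
            max_ref_counts[word] = max(max_ref_counts[word], n)
    clipped = sum(min(n, max_ref_counts[w]) for w, n in Counter(cand).items())
    precision = clipped / len(cand)
    # Brevity penalty against the reference closest in length (shorter on ties).
    c = len(cand)
    r = min((abs(len(ref) - c), len(ref)) for ref in refs)[1]
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return 100 * bp * precision


human = ["a dog runs on the grass"]
print(bleu1("a dog is running on the grass", human))  # 5 of 7 words match
print(bleu1("a dog", human))  # every word matches, but heavily penalized as too short
```

Because such a score only counts overlapping words, it can rate a caption well even when a person would judge it off the mark, which is one reason researchers also ask humans to rate the output.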

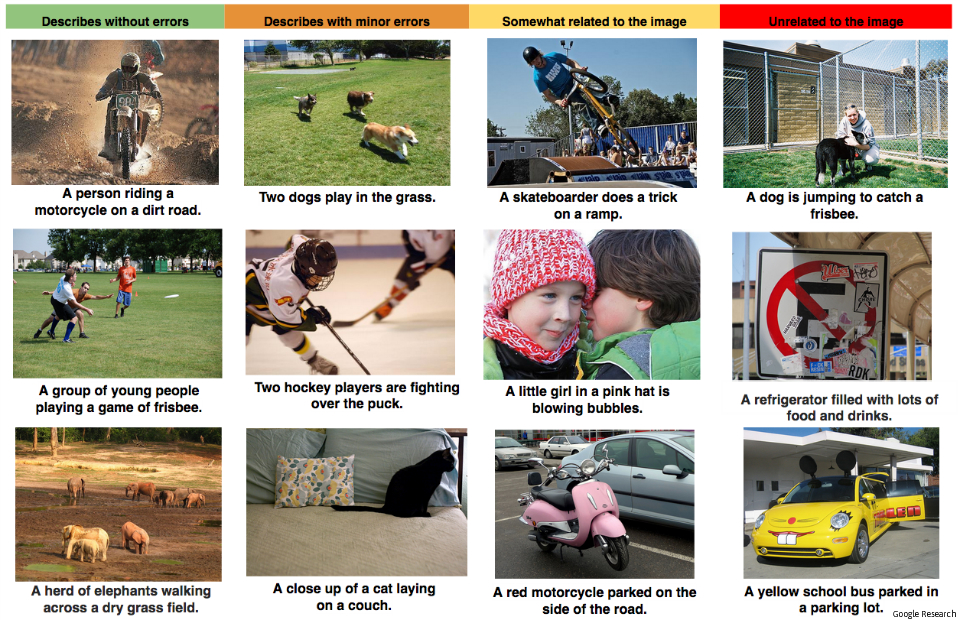

Some descriptions were spot-on, while others were a bit off the mark.

"Google’s software posted scores in the 60s on a 100-point scale. Humans doing the test typically score in the 70s," Technology Review quoted Vinyals as saying.

But when Google asked humans to rate the software’s descriptions of images on a scale of 1 to 4, the software averaged only 2.5.

Still, Technology Review said the result suggests Google is "far ahead of other researchers working to create scene-describing software."

By comparison, Stanford researchers recently published details of their own system, which scored between 40 and 50 on the same standard test.

Too early

Vinyals said researchers are still in the early stages of understanding how to create and test this software.

He predicted that research into understanding and describing scenes would now intensify.

But one potential problem involves natural scenes, as there are "fewer labeled photos of more natural scenes." — Joel Locsin/TJD, GMA News
